Optimal CPU concurrency is a key concept in maximizing the performance of multi-threaded applications, especially in resource-intensive scenarios like machine learning tasks. By understanding and leveraging CPU core types, developers can enhance their software’s multi-threading performance significantly. Tools such as Firefox ML performance harness the capabilities of technologies like SharedArrayBuffer, enabling seamless execution across multiple threads. Additionally, APIs like navigator.hardwareConcurrency provide essential insights into the number of logical cores available, guiding programmers to adjust their thread counts effectively. Ultimately, striking the right balance in CPU concurrency not only improves application efficiency but also optimizes memory usage, contributing to a smoother user experience.

When discussing the balance of processing power in computational tasks, terms such as CPU parallelism and thread management come into play. Efficiently distributing workloads across various CPU types can lead to enhanced multi-core performance, particularly in computation-heavy environments like artificial intelligence. Exploring methods to achieve optimal CPU concurrency can significantly impact how well applications run, particularly those relying on advanced features such as SharedArrayBuffer. Utilizing tools that provide clarity on available system resources, such as navigator.hardwareConcurrency, allows developers to fine-tune the threading in their applications. Overall, understanding these underlying principles is critical for any developer looking to optimize their software’s performance.

Understanding CPU Concurrency in ML Tasks

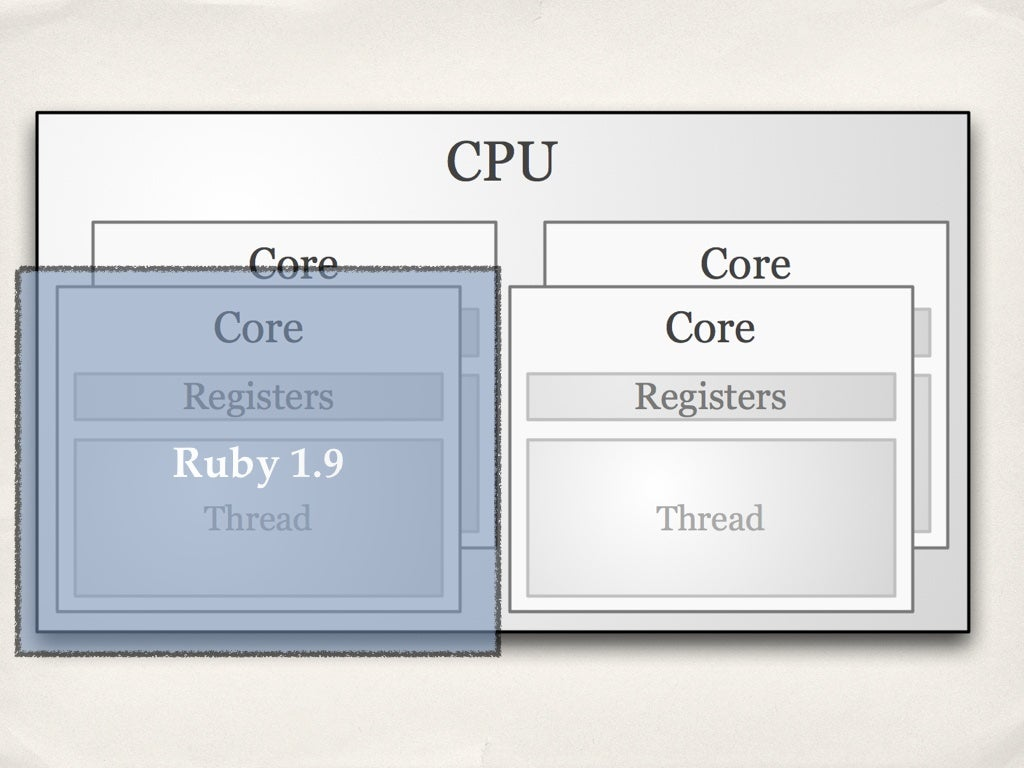

In the realm of machine learning workloads, understanding CPU concurrency becomes crucial for optimizing performance. The term ‘CPU concurrency’ refers to the ability of a CPU to execute multiple threads simultaneously. By effectively utilizing concurrency, machine learning operations can significantly enhance processing speeds. For instance, using high-performance cores to handle demanding computations while allowing efficiency cores to manage background tasks fosters better resource allocation. This results in an improved balance between power consumption and performance, especially critical for intensive tasks like those executed within the Firefox AI Runtime.

Furthermore, achieving optimal CPU concurrency requires a nuanced understanding of the underlying hardware architecture. By leveraging features like navigator.hardwareConcurrency, developers can gauge the number of logical cores a CPU offers. However, blindly relying on this number may lead to over-committing threads, potentially degrading performance. For maximum effectiveness, it’s important to align the number of threads with the specific characteristics of CPU core types. This leads to enhanced multi-threading performance, crucial for workloads including machine learning tasks that handle large datasets like those found in PDF.js.

Exploring CPU Core Types and Their Impact

The types of CPU cores directly impact the efficiency of thread execution in multi-threaded applications. For example, modern CPUs often contain a mix of performance (P-cores) and efficiency cores (E-cores). Performance cores are designed to handle high-demand tasks, whereas efficiency cores manage less intensive operations. When configuring the concurrency for ML tasks, it’s vital to prioritize the utilization of P-cores, maximizing their potential while allowing E-cores to assist in less demanding duties. Mismanagement of this balance can result in poor thread scheduling and diminished performance.

For instance, on systems using AMD’s latest architectures, the absence of dedicated efficiency cores means that all cores are performance-capable. This can lead to more effective utilization of CPU resources, particularly for tasks requiring intensive parallel processing. Understanding the distinctions among CPU core types allows developers to tailor their applications strategically, enhancing both speed and efficiency when running machine learning models such as those in Firefox. With this knowledge, developers can better navigate the complexities inherent in multi-threaded environments.

Harnessing SharedArrayBuffer for Improved Performance

The SharedArrayBuffer API plays a pivotal role in improving concurrency for applications running on JavaScript environments, especially in contexts like the Firefox ML Runtime. By allowing multiple threads to access a shared memory space, SharedArrayBuffer facilitates more efficient communication and data sharing among threads. This capability is essential for machine learning tasks that often involve simultaneous data processing, making it a powerful tool in optimizing multi-threading performance.

However, developers need to be cautious about using SharedArrayBuffer effectively. While it can lead to performance boosts, there are potential pitfalls, such as race conditions and data integrity issues when multiple threads operate on the same data. Thus, while integrating SharedArrayBuffer into applications can yield better concurrency results, it necessitates a well-thought-out approach to avoid compromising performance gains. With careful implementation, leveraging SharedArrayBuffer can significantly enhance the efficiency of inference processes within the Firefox ML ecosystem.

The Role of navigator.hardwareConcurrency in Optimization

The navigator.hardwareConcurrency API provides developers with insight into the logical core count available on a user’s device. Although this can serve as a useful starting point for configuring thread counts in multi-threaded applications, relying solely on its output may not yield optimal results. For instance, using the default logical cores without considering the types of threads being executed can lead to overcommitment and inefficient performance. A more refined approach involves evaluating not only the number of logical cores but also the core types—such as performance and efficiency cores—to inform concurrency decisions.

A common approach to mitigate this overcommitment issue involves modifying the basic output of navigator.hardwareConcurrency. For instance, it’s typical to divide the logical core count by two, as excess threads may lead to performance degradation due to thread contention. This tailored configuration allows developers to maintain an efficient throughput, especially in resource-intensive applications such as machine learning models. Moreover, as a result of evolving hardware architectures, leveraging navigator.hardwareConcurrency in conjunction with insights on available core types will lead to more intelligent thread management.

Strategies for Optimal Multi-Threading Performance

Achieving optimal multi-threading performance in machine learning tasks involves carefully strategizing the number of threads in relation to physical and logical core counts. Depending on the architecture—such as Intel’s Hyper-Threading or ARM’s big.LITTLE setups—this optimization can vary drastically. Developers need to assess their workloads and determine the balance between speed and resource utilization.

One practical approach is to experiment with various concurrency levels while monitoring performance metrics closely. Tools that analyze the relationship between thread count and execution time can help identify the sweet spot for optimal CPU concurrency. By adjusting the number of active threads in response to the type of complexity of the ML tasks at hand, developers can significantly improve their application performance while avoiding pitfalls related to excessive memory usage and processing delays.

The Importance of CPU Architecture Awareness

Awareness of CPU architecture is fundamental for developers aiming to boost the performance of multi-threaded applications. Different architectures, such as Apple’s M1 Pro with its unique blend of performance and efficiency cores, or AMD’s Ryzen series, come with distinct characteristics that must be understood when planning concurrency. For example, the presence of unique cores in Apple Silicon allows for more tailored execution of threads based on task demands.

Moreover, given the increasing complexity in CPU designs, such as Intel’s new hybrid architecture, developers must remain informed about these changes to ensure their applications are truly optimized. Emphasizing an architecture-aware approach allows for better decision-making when building software solutions that leverage multi-threading, ultimately maximizing the capabilities of modern CPUs for tasks in the Firefox ML Runtime and beyond.

Analyzing Performance Across Diverse Hardware

In the pursuit of efficient performance in multi-threaded applications, analyzing the behavior of algorithms across different hardware configurations is crucial. The diversity of user devices—from Intel-based systems to Apple Silicon—creates a need for tailored performance assessments. By employing a range of hardware profiles in performance CI, developers can gather valuable insights about how their software performs under varying conditions and architectures.

Understanding how specific CPUs handle concurrency offers developers the potential to fine-tune their applications for optimal performance. This entails not only examining CPU core types but also considering associated metrics such as memory usage and execution time. By collecting comprehensive performance data across different CPUs, errors and inefficiencies can be promptly addressed, thus ensuring users experience the best possible performance in their tasks.

Navigating Thread Management Challenges

Thread management can often become a complex ordeal, particularly in environments with limited resources. For advanced ML tasks, the potential memory overhead induced by unnecessary thread creation can significantly hinder performance. Therefore, establishing appropriate thread management strategies is imperative to efficiently allocate CPU cores and resources.

One effective strategy involves dynamically adjusting the concurrency based on the model’s workload and the available hardware. Implementing logic that evaluates system capacity in real time allows applications to adapt their thread counts appropriately. This adaptability helps prevent performance declines often associated with thread over-commitment, thus paving the way toward more efficient execution of machine learning tasks.

Future-Proofing ML Task Optimization with New APIs

As advancements in CPU technology continue to emerge, developers must stay ahead by adopting new APIs that enhance multi-threading performance. For instance, as Firefox transitions from using navigator.hardwareConcurrency to more precise methods, the ability to assess the optimal number of active threads will evolve significantly. New standards in API design can offer clearer insights into core capabilities and workload management, ultimately enabling better performance yields.

By embracing upcoming APIs and changes in CPU designs, developers can future-proof their applications, ensuring they leverage the latest processor features. This proactive approach will not only provide a competitive advantage but also align with the evolving landscape of hardware capabilities, allowing for seamless integration of multi-threading approaches in machine learning tasks within the Firefox ecosystem.

Frequently Asked Questions

What is optimal CPU concurrency and how does it affect multi-threading performance?

Optimal CPU concurrency refers to the ideal number of threads that can be run simultaneously to achieve the best performance without overcommitting CPU resources. In multi-threading, this means balancing the workload across physical and logical cores to prevent performance degradation. Over-committing threads can lead to an increase in thread scheduling overhead and cache contention, which can ultimately slow down execution times. Understanding the configuration of your CPU cores, such as utilizing only performance cores while avoiding efficiency cores, is crucial for maximizing multi-threading performance.

How can navigator.hardwareConcurrency help in determining optimal CPU concurrency?

The navigator.hardwareConcurrency API provides the number of logical cores available on a system, serving as a rough estimate for optimal CPU concurrency. However, relying solely on this value can lead to poor performance due to overcommitting threads, particularly on systems with different core types. It’s important to combine this information with assessments of physical cores and current system activity to truly optimize concurrency for specific workloads.

What role do CPU core types play in determining optimal CPU concurrency?

Different CPU core types, such as performance and efficiency cores, play a significant role in determining optimal CPU concurrency. For instance, high-performance cores are better suited for demanding tasks like ML inference, while efficiency cores handle lighter workloads. To achieve optimal CPU concurrency, it is essential to utilize the performance cores maximally, as running tasks on efficiency cores can lead to diminished returns and slower execution.

Why does increasing the number of threads sometimes cause performance drops in Firefox ML performance?

Increasing the number of threads in Firefox ML performance can lead to performance drops because of factors such as thread scheduling overhead and cache contention. When too many threads run concurrently, especially if they exceed the number of available physical cores, the CPU struggles to manage them efficiently, which can result in longer execution times. Therefore, finding the optimal concurrency level under varying workloads is crucial for maintaining high performance.

How does SharedArrayBuffer facilitate optimal CPU concurrency in multi-threading applications?

SharedArrayBuffer allows multiple threads to access a shared memory space, thus enabling concurrent operations on its content. In multi-threading applications, such as those running in the Firefox AI Runtime, utilizing SharedArrayBuffer can significantly enhance CPU concurrency by distributing processing loads across available CPU cores. This enables faster data handling and improved performance, provided the number of threads is optimized according to the machine’s specifications.

What adjustments should be made when using AMD, Intel, or Apple CPUs for optimal CPU concurrency?

When utilizing AMD, Intel, or Apple CPUs for optimal CPU concurrency, it’s important to consider the architecture of the CPU cores. For Intel and AMD, focus on maximizing performance cores while avoiding overcommitment on efficiency cores. For Apple CPUs, prioritize high-performance cores for computational tasks and reserve efficiency cores for background processes. Each CPU manufacturer has a different approach to core design, so tailoring the thread count based on the specific core configurations will yield better multi-threading performance.

| Core Type | Description | Examples |

|---|---|---|

| Physical Cores | The actual hardware cores in the CPU. | MacBook M1 Pro (10 cores), Intel i9-10900K (10 cores) |

| Logical Cores | Threads that can run concurrently, often more than physical cores due to technologies like Hyper-Threading. | Intel Core i9-10900K (20 threads), AMD Ryzen 7 5800X (16 threads) |

| Performance Cores (P-Cores) | High-performance cores designed for demanding tasks, suitable for complex computations. | Apple M1 Pro (8 P-Cores), Intel Alder Lake (mixed architecture) |

| Efficiency Cores (E-Cores) | Lower power cores designed for less demanding tasks to optimize power consumption. | Apple M1 Pro (2 E-Cores), Intel Alder Lake (for lighter workloads) |

| Specialized Cores | Cores that serve specific functions, like GPUs or NPUs, that can handle machine learning tasks. | NVIDIA GPUs, specialized NPUs in mobile devices |

Summary

Optimal CPU concurrency is pivotal in enhancing performance during CPU-intensive tasks, particularly in machine learning operations. As we evaluate the advantages of leveraging both physical and logical cores, it becomes evident that striking the right balance in thread usage is essential. Optimal CPU concurrency allows us to maximize efficiency while avoiding the pitfalls of overcommitting threads, which can lead to decreased performance due to resource contention. Understanding the distinctions between core types and their roles in various tasks will empower developers to harness their CPUs more effectively.